Building a better Kindle (or, Why Buttons Matter)

Do you ever read something that feels like it was written just for you? That’s how I feel whenever Craig Mod writes about digital reading. His latest essay, “Reconsidering the Hardware Kindle Interface,” has a title that won’t pop unless you 1) love reading and 2) know that Craig is really good at making design talk exciting and accessible.

The big, simple, so-obvious-it-seems-trite-to-point-out claim here is that hardware buttons on e-readers are good and important. When your primary mode of interaction is doing one or two things over and over again, hardware buttons are really smart and valuable. I’ll let Craig explain why:

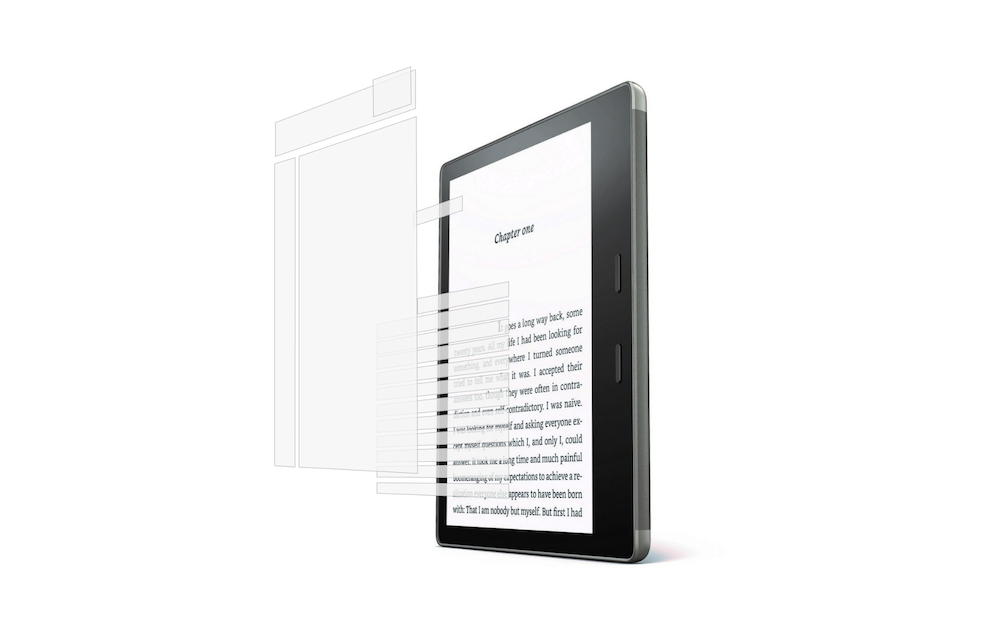

Hardware buttons inextricably tie you to a specific interaction model. So for the iPhone to be a flexible container into which anything can be poured it makes most sense to have (almost) no hardware controls.

But the hardware Kindle? Oh, what a wonderful gift for Amazon designers. The Kindle is predictable! We know what we’re getting on almost every page. And the actions of the user are so strictly defined — turn page, highlight, go back to library — that you can build in hardware buttons to do a lot of heavy lifting. And yet! Amazon seems to ignore (to lesser and greater degrees depending on the device) how predictable a hardware Kindle is.

Specifically, dedicated hardware buttons mean that you can remove the unpredictability that comes with touching the screen. Touching the screen now means “I’m going to interact with the content.”

What benefit comes of making the content of the book a first class object? It removes the brittleness of the current interaction model. Currently, when you tap, you might invoke a menu, a page turn, a bookmark, or a highlight. Meta actions are on a layer above content interactions. A Kindle is just a content container. And so this feels upside down.

Touchscreens work best when they allow direct and explicit engagement with the objects on the screen.

If the content of the book was the only screen object, a tap on a word would instantly bring up the dictionary. A drag would highlight. A single tap on an image would zoom in. Suddenly the text is alive and present. Your interaction with it? Thoughtless. Confident. No false taps. No accidental page turns. No accidental bookmarks. This further simplifies the logic of the touch engine watching for taps in the background, making these interactions faster, programmatic logic simpler.

Doesn’t it just sound like a goddamn delight?
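
For the programmers in the room, here is a rough sketch of why that touch logic gets simpler. To be clear, this is my own illustration and not anything from Craig's essay or Amazon's software: the names (`Gesture`, `handle_touch`, `handle_button`) and the button labels are all made up, and I'm assuming the device already knows what kind of thing is under your finger.

```python
from dataclasses import dataclass
from typing import Literal

# Hypothetical names throughout; this is not a real Kindle API.
TargetKind = Literal["word", "image"]
GestureKind = Literal["tap", "drag"]


@dataclass(frozen=True)
class Gesture:
    kind: GestureKind   # what the finger did
    target: TargetKind  # what was under the finger when it started


def handle_touch(gesture: Gesture) -> str:
    """Every touch is a content action: there are no tap zones to check."""
    if gesture.kind == "drag":
        return "highlight"
    if gesture.target == "image":
        return "zoom_image"
    return "show_dictionary"


def handle_button(button: str) -> str:
    """Meta actions live on hardware buttons (labels are assumptions)."""
    actions = {
        "next": "next_page",
        "prev": "previous_page",
        "home": "go_to_library",
    }
    return actions[button]
```

In this sketch the touch handler never has to guess whether a tap near the edge of the screen was meant as a page turn, a menu, or a bookmark, because those actions are not reachable by touch at all. That is the whole argument in about thirty lines.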
