Solve trolley problem scenarios with MIT’s Moral Machine

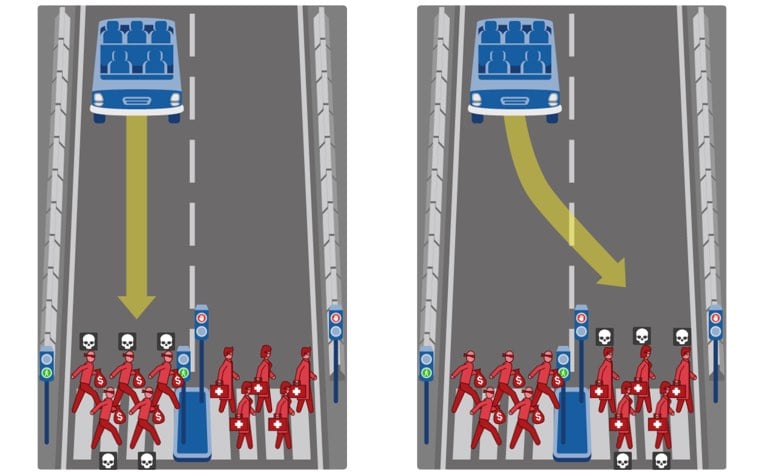

A group at MIT built an app called the Moral Machine, “a platform for gathering a human perspective on moral decisions made by machine intelligence, such as self-driving cars”. They want to see how humans solve trolley problem scenarios.

> We show you moral dilemmas, where a driverless car must choose the lesser of two evils, such as killing two passengers or five pedestrians. As an outside observer, you judge which outcome you think is more acceptable. You can then see how your responses compare with those of other people.

That was a really uncomfortable exercise. At the end, you’re given a “Most Killed Character” result. Pretty early on, my strategy became mostly to kill the people in the car because they should absorb the risk of the situation. The trolley problem may end up not being such a big deal, but I hope that the makers of these machines build them with care and thoughtfulness.[1]

[1] Uber’s self-driving cars terrify me. The company has shown little thoughtfulness and regard for actual humans in its short history, so why would its self-driving cars be any different? Its solution to the trolley problem would likely involve a flash bidding war between personal AIs as to who lives. Sorry, the rich white passenger outbid the four poor kids crossing the street!
